Demand Paging Overview

Demand paging is a technique where content appears to be present in RAM, but may in fact be stored on some external media and is transparently loaded when needed.

Purpose

Demand paging trades off increased available RAM against increased data latency, increased media wear and increased power usage. Overall performance may be increased as there can be more RAM available to each application as it runs.

Demand paging is used to reduce the amount of RAM that needs to be shipped with a device and so reduces the device cost.

Description

Demand paging relies on the fact that most memory accesses are likely to occur in a small region of memory rather than being spread over the entire memory map. This means that if only that small area of memory is available to a process (or thread) at any one time, then the amount of RAM required can be reduced. The small area of memory is known as a page. The working RAM is broken down into pages, some of which are used directly by the processor while the rest are spare. Since the Symbian platform is a multi-tasking operating system, there is more than one page in the working RAM at any one time. There is one page per thread.

There are three types of demand paging: ROM paging, code paging and writable data paging. All of these types of demand paging have some features in common:

A free RAM pool

Working RAM

A paging fault handler

A source of code and/or data that the processor needs to access.

With demand paging, the processor requires content to be present in the working RAM. If the content is not present, then a "paging fault" occurs and the required information is loaded into a page of the working RAM. This is known as "paging-in". When the contents of this page are no longer required, the page is returned to the pool of free RAM. This is known as "paging-out".

The difference between the types of demand paging is the source that is to be used:

For ROM paging, it is code and/or data stored under the ROM file system.

For code paging, it is code and/or data stored using the ROFS file system.

For writable data paging, it is the writable data stored in RAM, for example user stacks and heaps. In this case, the data is moved to a backing store.

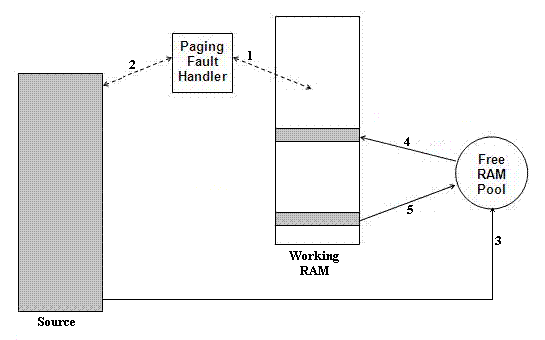

The diagram above shows the basic operations involved in demand paging:

The processor wants to access content which is not available in the working RAM, causing a 'paging fault'.

The paging fault handler starts the process for copying a page of the source into a page of RAM.

A page is selected.

The contents of the source are loaded into the page and this becomes part of the working memory.

When the contents of the page are no longer required, the page is returned to the free RAM pool.
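The steps above can be sketched as a small simulation. This is a minimal illustration rather than the Symbian implementation: the class name `DemandPager`, its methods, and the choice to page out the least recently used page when the free pool is empty are all assumptions made for the example. The text only says that a page is returned to the pool when its contents are no longer required.

```python
from collections import OrderedDict


class DemandPager:
    """Toy model (hypothetical names): free RAM pool, working RAM, fault handler, source."""

    def __init__(self, source, num_pages):
        self.source = source                      # backing media: page number -> contents
        self.free_pool = list(range(num_pages))   # indices of RAM pages not in use
        # Working RAM: source page number -> (RAM page index, contents),
        # ordered from least to most recently used.
        self.working = OrderedDict()
        self.faults = 0

    def access(self, page_no):
        """Return the contents of a source page, paging it in if it is absent."""
        if page_no in self.working:
            self.working.move_to_end(page_no)     # mark as most recently used
            return self.working[page_no][1]
        return self._handle_fault(page_no)        # step 1: paging fault

    def _handle_fault(self, page_no):
        """Steps 2 to 4: select a RAM page and load the source contents into it."""
        self.faults += 1
        if not self.free_pool:
            self._page_out_oldest()               # assumption: LRU page-out policy
        ram_page = self.free_pool.pop()           # step 3: a page is selected
        contents = self.source[page_no]           # step 4: load from the source
        self.working[page_no] = (ram_page, contents)
        return contents

    def _page_out_oldest(self):
        """Step 5: return the least recently used page to the free RAM pool."""
        _, (ram_page, _) = self.working.popitem(last=False)
        self.free_pool.append(ram_page)


source = {n: f"contents of page {n}" for n in range(8)}
pager = DemandPager(source, num_pages=2)
for n in [0, 1, 0, 2, 0, 1]:
    pager.access(n)
print("paging faults:", pager.faults)
```

With only two RAM pages, repeated accesses to page 0 are served from working RAM, while pages 1 and 2 compete for the remaining page and fault each time they return.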

Demand Paging limitations

The following are known limitations of demand paging:

The access time cannot be guaranteed.

The access time differs by orders of magnitude depending on whether a page fault occurs. If a page fault does not occur, the time taken for a memory access is in the range of tens to hundreds of nanoseconds. If a page fault does occur, the time taken for a memory access could be in the millisecond range.

Device drivers have to be written to allow for data latency when a page fault occurs.
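To get a feel for how the access-time gap affects average latency, the sketch below computes a weighted average of the two cases. The function name `effective_access_ns` and the specific figures (100 ns without a fault, 1 ms with a fault) are illustrative assumptions chosen from within the ranges quoted above, not measured values.

```python
def effective_access_ns(fault_rate, hit_ns=100.0, fault_ns=1_000_000.0):
    """Average memory access time in nanoseconds for a given page fault rate.

    hit_ns and fault_ns are assumed example values, not measurements.
    """
    if not 0.0 <= fault_rate <= 1.0:
        raise ValueError("fault_rate must be between 0 and 1")
    # Weighted average of the no-fault and fault cases.
    return (1.0 - fault_rate) * hit_ns + fault_rate * fault_ns


for rate in (0.0, 0.0001, 0.001, 0.01):
    print(f"fault rate {rate:.2%}: {effective_access_ns(rate):,.1f} ns on average")
```

Under these assumed figures, even one fault per thousand accesses raises the average access time by roughly a factor of ten, and the worst case for any single access remains the full fault time. This is why the access time cannot be guaranteed.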

Copyright ©2010 Nokia Corporation and/or its subsidiary(-ies). All rights reserved. Unless otherwise stated, these materials are provided under the terms of the Eclipse Public License v1.0.