Graphics Composition

Composition is the process of combining output elements from various sources to create the screen display that the end user sees on the device.

Variant: ScreenPlay.

In a multi-tasking device many of the activities taking place simultaneously generate output for display on the screen. Their output can include words, pictures, video, games and the screen furniture (scroll bars, buttons, icons, borders, tabs, menus, title bars) familiar to every computer user.

Many of these output elements can appear at the same time, either next to each other or overlapping each other. They can be opaque, such that they obscure anything behind, or semi-transparent such that the elements underneath are partially visible.
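As a minimal sketch of what opaque and semi-transparent mean for a single pixel, the following C++ fragment blends a front pixel over an opaque pixel behind it. The `Pixel` type and the `BlendChannel` and `Over` functions are hypothetical names chosen for illustration. They are not part of any ScreenPlay API, and a real composition engine may use a different blend formula or dedicated hardware.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical pixel type: 8 bits per channel.
// Alpha 255 means opaque, alpha 0 means fully transparent.
struct Pixel {
    std::uint8_t r, g, b, a;
};

// Blend one colour channel: the front value is weighted by alpha and the
// back value by the remainder. Adding 127 rounds to the nearest integer.
inline std::uint8_t BlendChannel(std::uint8_t front, std::uint8_t back,
                                 std::uint8_t alpha) {
    return static_cast<std::uint8_t>(
        (front * alpha + back * (255 - alpha) + 127) / 255);
}

// Composite `front` over `back`. This sketch assumes the pixel behind is
// opaque, so the result is always opaque too.
inline Pixel Over(Pixel front, Pixel back) {
    assert(back.a == 255);
    return Pixel{BlendChannel(front.r, back.r, front.a),
                 BlendChannel(front.g, back.g, front.a),
                 BlendChannel(front.b, back.b, front.a),
                 255};
}
```

With an alpha of 255 the front pixel completely obscures the one behind it. With an alpha of 0 the pixel behind shows through unchanged, and intermediate values give a mix of the two.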

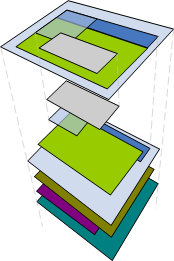

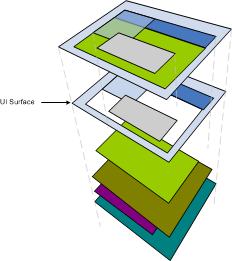

The diagram below illustrates how the display that the viewer sees (looking down from the top) is a two-dimensional representation composed from a series of layers.

Composition requires:

- Calculations based on the size, position, visibility, transparency and ordering of the layers to determine what will be displayed. This is a logic exercise referred to as UI Composition.

- Handling of image content, which is a data processing exercise referred to as Image Composition.

Because the first is a logic exercise and the second is a data processing exercise, the two are handled separately.

UI Composition

UI Composition is performed by the Window Server. Each application has its own window group containing all of its child windows. The Window Server keeps track of the windows' positions, sizes, visibilities, transparencies and z-order and is able to establish which windows, and which parts of each window, are visible. It ensures that each visible part of a window is kept up-to-date by calling its application when necessary.
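The kind of logic involved can be sketched in a few lines of C++. The example below is an illustrative model only, not the Window Server's implementation: the `Rect` and `Window` types and the `ContributorsAt` function are hypothetical names. For a given screen position, it walks the windows from front to back and collects those that contribute to the pixel, stopping at the first opaque one.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical rectangle in screen coordinates.
struct Rect {
    int x, y, width, height;
    bool Contains(int px, int py) const {
        assert(width >= 0 && height >= 0);
        return px >= x && px < x + width && py >= y && py < y + height;
    }
};

// Hypothetical record of the properties tracked for each window.
struct Window {
    std::string name;
    Rect bounds;
    int zOrder;    // larger values are closer to the viewer
    bool visible;
    bool opaque;   // false means semi-transparent
};

// Returns the names of the windows that contribute to the pixel at
// (px, py), front-most first. Everything behind the first opaque window
// is obscured and therefore left out.
std::vector<std::string> ContributorsAt(std::vector<Window> windows,
                                        int px, int py) {
    std::stable_sort(windows.begin(), windows.end(),
                     [](const Window& a, const Window& b) {
                         return a.zOrder > b.zOrder;
                     });
    std::vector<std::string> result;
    for (const Window& w : windows) {
        if (!w.visible || !w.bounds.Contains(px, py)) {
            continue;
        }
        result.push_back(w.name);
        if (w.opaque) {
            break;
        }
    }
    return result;
}
```

A production implementation would work with regions rather than individual pixels, but the inputs are the same ones listed above: position, size, visibility, transparency and z-order.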

Image Composition

Prior to the introduction of ScreenPlay the Window Server did rudimentary composition in its main thread and rendered composited output to the screen buffer using the GDI (Graphics Device Interface). To achieve high frame rates, applications typically bypassed the Window Server using Direct Screen Access (DSA) and animation DLLs.

In ScreenPlay, sources that generate complex graphical output render directly to graphics surfaces. The Window Server delegates the composition of surfaces to a composition engine that device creators can adapt to take advantage of graphics processing hardware.

Although the composition engine runs in the Window Server's process, it is largely transparent to application developers and works quite differently from the Window Server. Instead of using windows, it uses surfaces to store pixel data and elements to manipulate size, position, z-order, visibility and transparency.

While an element is a simple lightweight object, and easy to manipulate, a surface stores a large amount of data and its handling requires more consideration.
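The difference in weight between the two can be illustrated with a small C++ model. All of the names here (`Surface`, `Element`, `CreateSurface`, `SurfaceBytes`) are hypothetical and are not the composition engine's actual types. The sketch also assumes 32 bits per pixel, which the text does not specify.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical surface: owns the pixel data (assumed 32 bits per pixel).
struct Surface {
    int width;
    int height;
    std::vector<std::uint32_t> pixels;
};

// Hypothetical element: a handful of fields that describe how and where
// a surface appears. It refers to a surface but does not copy its pixels.
struct Element {
    std::shared_ptr<const Surface> source;
    int x = 0;
    int y = 0;
    int zOrder = 0;
    bool visible = true;
    std::uint8_t opacity = 255;
};

// Allocates a surface with every pixel cleared to zero.
std::shared_ptr<Surface> CreateSurface(int width, int height) {
    assert(width > 0 && height > 0);
    auto surface = std::make_shared<Surface>();
    surface->width = width;
    surface->height = height;
    surface->pixels.assign(
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height), 0u);
    return surface;
}

// Size of the pixel data in bytes.
std::size_t SurfaceBytes(const Surface& surface) {
    return surface.pixels.size() * sizeof(std::uint32_t);
}
```

Under these assumptions, a surface of 360 x 640 pixels (an example size, not taken from the text) holds 921,600 bytes of pixel data, while an element is a few tens of bytes. Moving or reordering an element changes only its own fields, and several elements can refer to the same surface without duplicating the pixels.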

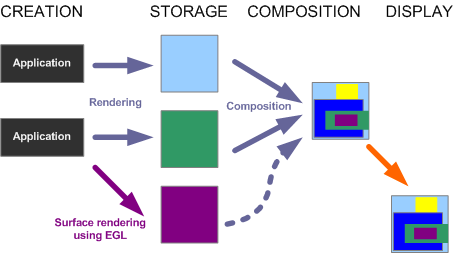

The diagram below is a simplistic representation of how applications create output which is rendered, composited and displayed.

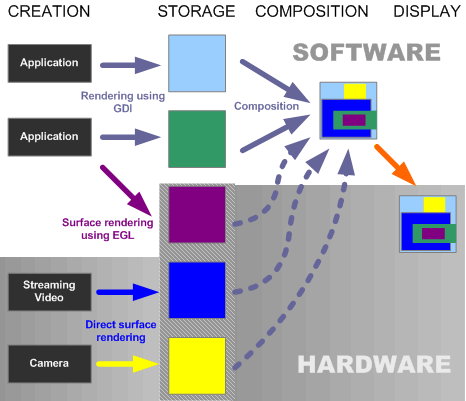

In a device with graphics acceleration hardware (a Graphics Processing Unit or GPU) there might, in addition to the memory managed by the CPU, be additional memory managed by the GPU. Image data may therefore be considered to have been software rendered (onto a surface in CPU memory) or hardware rendered (onto a surface in hardware accelerated GPU memory). The following diagram shows applications and other graphical data sources rendering to surfaces in software and hardware.

The diagram, however, represents a system with several problem areas that would render it unsuitable for any practical implementation:

- In practice it is likely that once data has been rendered to hardware-managed memory it is, to all intents and purposes, unavailable to software: the CPU is unable to access it sufficiently quickly. The dotted paths on the diagram above must therefore be avoided.

- Furthermore, GDI rendered data (the UI) is typically 'stored' as redrawing instructions rather than bitmapped pixel data, so rendering it to bitmaps before composition is likely to use a lot of memory unnecessarily.

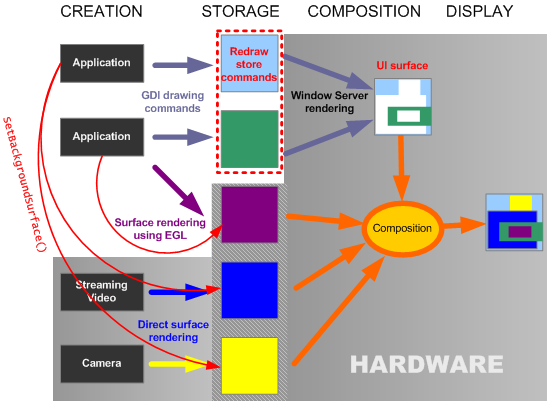

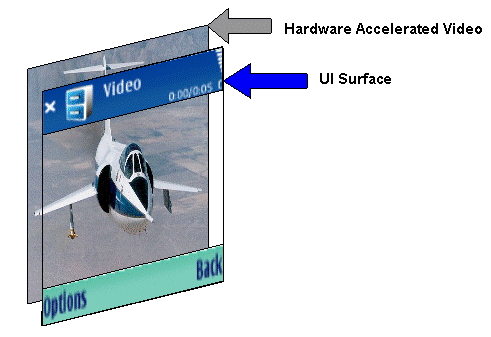

In ScreenPlay, the UI is therefore composed and rendered onto a single surface before being composited with any other surfaces. This surface is termed the UI surface and is displayed on a layer placed in front of all of the others. In fact, the UI surface is created for the Window Server during system start up and is then passed to the composition components as a 'special case' surface for composition.

Combining Window Server and Composition

The compositing of surfaces according to their origin means that the physical composition process behaves differently from the logical composition process that is based on what the user and the UI are doing. Logically, the windows in the UI and memory rendered surfaces may be on layers that are interleaved, yet the memory rendered surfaces are physically composed behind the UI surface.

ScreenPlay addresses this issue by associating 'external' surfaces with windows in the Window Server using the RWindowBase::SetBackgroundSurface() method. This means that the Window Server is able to include them in its logical composition and make provision for them during data composition. A window with its background set to a surface in this way becomes transparent in the UI surface that the Window Server renders.

In most cases this is not apparent to the viewer. Surfaces that are physically composed behind the UI appear in the correct position on the two-dimensional display. The diagram below illustrates how Window Server-rendered UI content and external surfaces are composited using the UI surface.

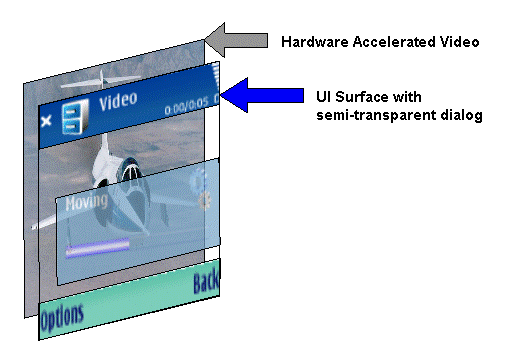
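The effect of giving a window a background surface can be modelled with a small, self-contained C++ sketch. It does not call the Symbian API. The `Layer` and `Rect` types and the `PunchHole` and `Composite` functions are hypothetical, and each layer is represented as a grid of characters in which `.` stands for a transparent pixel.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model: one character per pixel, '.' meaning transparent.
using Layer = std::vector<std::string>;
constexpr char kTransparent = '.';

struct Rect {
    int x, y, width, height;
};

// Models a window whose background is set to an external surface: the
// window's area in the UI surface becomes transparent.
void PunchHole(Layer& uiSurface, const Rect& window) {
    for (int row = window.y; row < window.y + window.height; ++row) {
        for (int col = window.x; col < window.x + window.width; ++col) {
            uiSurface.at(static_cast<std::size_t>(row))
                     .at(static_cast<std::size_t>(col)) = kTransparent;
        }
    }
}

// Composites the UI surface in front of an external surface of the same
// size. Wherever the UI surface is transparent, the external surface
// shows through.
Layer Composite(const Layer& uiSurface, const Layer& external) {
    assert(uiSurface.size() == external.size());
    Layer out = external;
    for (std::size_t row = 0; row < uiSurface.size(); ++row) {
        assert(uiSurface[row].size() == external[row].size());
        for (std::size_t col = 0; col < uiSurface[row].size(); ++col) {
            if (uiSurface[row][col] != kTransparent) {
                out[row][col] = uiSurface[row][col];
            }
        }
    }
    return out;
}
```

If UI content such as a menu is drawn into part of the hole after it has been punched, that content appears in front of the external surface in the composited result, even though the external surface is physically composed behind the whole UI surface. This is how logically interleaved layers can still look correct on screen.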

Although this method of composition is flexible and powerful, it does have some limitations, particularly with respect to semi-transparent hardware-accelerated surfaces.

It is not possible, for example, to include a semi-transparent surface in front of the UI surface. In such cases the composition engine determines how the display is composed.

Here is a second version of the diagram at the top of the page showing how the same composition might be achieved in practice. The UI menus, windows and dialogs are composited by the Window Server onto a single surface. The light green layer displays a hardware rendered surface so it is actually behind the layer on which it appears.

Copyright ©2010 Nokia Corporation and/or its subsidiary(-ies). All rights reserved. Unless otherwise stated, these materials are provided under the terms of the Eclipse Public License v1.0.