Graphics Composition Collection Overview

This topic provides an introduction to the Graphics Composition collection.

Variant: ScreenPlay. Target audience: Device creators.

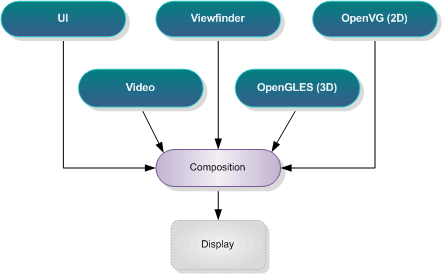

In the context of ScreenPlay, composition is the process of combining content, possibly from several different sources, before it is displayed. For example, the figure above represents a scenario in which content from the camera viewfinder, video, and OpenVG 2D and OpenGL ES 3D games is brought together with the UI content and then displayed on the screen. Some of the incoming content may be produced by hardware renderers, and the composition itself may be hardware accelerated.

In ScreenPlay, sources that generate complex graphical output (such as the camera viewfinder, video, and OpenGL ES and OpenVG games) can render directly to composition surfaces. A surface is essentially a pixel buffer with associated metadata describing its width, height, stride and pixel format. In the Symbian implementation, surfaces are implemented using shared chunks, which are memory regions that can be safely shared between user-side processes and kernel-side code.
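The relationship between a surface's metadata and its pixel buffer can be sketched as follows. This is a minimal illustration in standard C++, not the Symbian Surface Manager API: the names `SurfaceInfo`, `PixelFormat`, `bufferSize` and `pixelOffset` are hypothetical, and only two pixel formats are assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical pixel formats used only for this sketch.
enum class PixelFormat { ARGB8888, RGB565 };

// Bytes occupied by one pixel in each illustrative format.
inline std::size_t bytesPerPixel(PixelFormat format) {
    return format == PixelFormat::ARGB8888 ? 4 : 2;
}

// Metadata that accompanies a surface's pixel buffer.
struct SurfaceInfo {
    std::uint32_t width;   // pixels per row
    std::uint32_t height;  // number of rows
    std::uint32_t stride;  // bytes from the start of one row to the next
    PixelFormat format;
};

// Minimum size in bytes of one buffer described by `info`.
inline std::size_t bufferSize(const SurfaceInfo& info) {
    return static_cast<std::size_t>(info.stride) * info.height;
}

// Byte offset of pixel (x, y) from the start of the buffer.
inline std::size_t pixelOffset(const SurfaceInfo& info,
                               std::uint32_t x, std::uint32_t y) {
    assert(x < info.width && y < info.height);
    // The stride may exceed width * bytes-per-pixel when rows are padded.
    assert(info.stride >= info.width * bytesPerPixel(info.format));
    return static_cast<std::size_t>(y) * info.stride +
           x * bytesPerPixel(info.format);
}
```

The stride is kept separate from the width because rows may be padded for alignment, so the address of a pixel must be derived from the stride rather than from the width alone.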

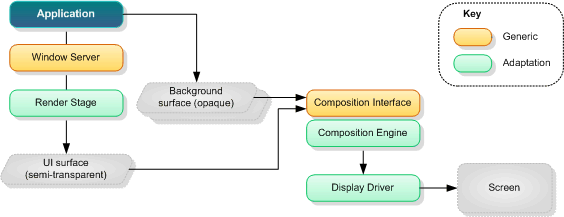

The Window Server's render stage plug-ins render all of the UI content onto a special surface known as the UI surface. Unlike the background surfaces, which are opaque, the UI surface is semi-transparent. The diagram above shows the composition engine bringing together the UI surface and the background surfaces onto the frame buffer, which the Display Driver then displays on the screen.
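To illustrate what combining a semi-transparent UI surface with an opaque background surface involves at the pixel level, the sketch below applies a conventional source-over blend. It assumes non-premultiplied ARGB8888 pixels; the function names are hypothetical, and the actual blending rules used by a given composition engine or hardware may differ.

```cpp
#include <cstdint>

// Blend one 8-bit channel of `src` over `dst` using `alpha` (0..255).
inline std::uint8_t blendChannel(std::uint32_t src, std::uint32_t dst,
                                 std::uint32_t alpha) {
    // Adding 127 rounds to the nearest value instead of truncating.
    return static_cast<std::uint8_t>(
        (src * alpha + dst * (255 - alpha) + 127) / 255);
}

// Composite a non-premultiplied ARGB8888 UI pixel over an opaque
// background pixel. The result is always fully opaque.
inline std::uint32_t blendOver(std::uint32_t ui, std::uint32_t background) {
    const std::uint32_t a = ui >> 24;
    const std::uint32_t r =
        blendChannel((ui >> 16) & 0xFF, (background >> 16) & 0xFF, a);
    const std::uint32_t g =
        blendChannel((ui >> 8) & 0xFF, (background >> 8) & 0xFF, a);
    const std::uint32_t b = blendChannel(ui & 0xFF, background & 0xFF, a);
    return 0xFF000000u | (r << 16) | (g << 8) | b;
}
```

Where the UI pixel is fully transparent, the background (for example, a video frame) shows through unchanged; where it is fully opaque, the UI pixel replaces the background.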

The composition engine maintains a stack of scene elements, or layers, each of which describes the geometric position, size and orientation of a piece of content. The engine computes what is visible and performs the actual composition work.
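The idea of computing what is visible from a stack of elements can be sketched as below. This is a deliberately simplified model: an element is skipped only when a single opaque element above it covers it completely, whereas a real engine would typically work with partial regions. The types `Rect` and `Element` and the function `cullHidden` are hypothetical names for this illustration.

```cpp
#include <cstddef>
#include <vector>

struct Rect {
    int x, y, width, height;
};

// True when `outer` completely covers `inner`.
inline bool covers(const Rect& outer, const Rect& inner) {
    return outer.x <= inner.x && outer.y <= inner.y &&
           outer.x + outer.width >= inner.x + inner.width &&
           outer.y + outer.height >= inner.y + inner.height;
}

struct Element {
    Rect destination;  // where the element lands on the screen
    bool opaque;       // false for content with transparency, such as the UI
};

// `stack` is ordered from bottom (index 0) to top.
// Returns the indices of the elements that still need to be drawn,
// in bottom-to-top order.
inline std::vector<std::size_t> cullHidden(const std::vector<Element>& stack) {
    std::vector<std::size_t> visible;
    for (std::size_t i = 0; i < stack.size(); ++i) {
        bool hidden = false;
        // Look for an opaque element higher in the stack that covers this one.
        for (std::size_t j = i + 1; j < stack.size() && !hidden; ++j) {
            hidden = stack[j].opaque &&
                     covers(stack[j].destination, stack[i].destination);
        }
        if (!hidden) {
            visible.push_back(i);
        }
    }
    return visible;
}
```

A semi-transparent element never hides what lies beneath it, which is why the UI surface at the top of the stack does not cause the background surfaces to be culled.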

For a general introduction to some of the key composition concepts, see Graphics Composition and Scene Elements.

Components

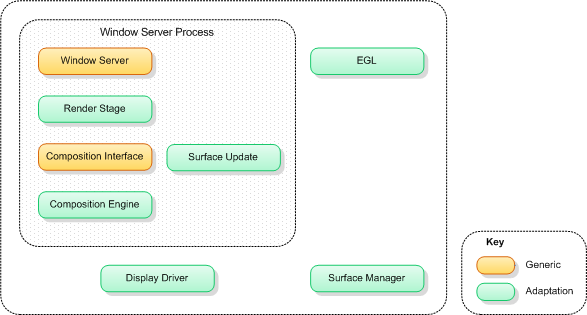

The diagram above shows the composition interface and engine, together with related components, and indicates which are generic parts of the Symbian platform and which can be adapted by device and hardware manufacturers.

Here we will look briefly at the role of the key components.

The composition engine maintains the stack of elements and computes what is visible. For example, it culls invisible areas and maintains a list of dirty rectangles. The composition engine also performs the actual composition work, blending the pixels if necessary. It supports limited transformation, such as scaling and rotation (in 90° increments). It can utilize GPU hardware composition and LCD hardware rotation if they are available. The Symbian Foundation provides a reference implementation based on the OpenWF Composition (OpenWF-C) APIs defined by the Khronos Group.
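Rotation in 90° increments is simple because it only permutes pixel coordinates. The sketch below shows one way to express that mapping, assuming clockwise rotation and a top-left origin; the names `Rotation`, `rotatedSize` and `rotatePoint` are hypothetical and are not taken from OpenWF-C or the Symbian APIs.

```cpp
#include <cassert>

enum class Rotation { None, Cw90, Cw180, Cw270 };

struct Size {
    int width, height;
};

struct Point {
    int x, y;
};

// Size of the output after rotating content of size `s`.
inline Size rotatedSize(Size s, Rotation r) {
    const bool swap = (r == Rotation::Cw90 || r == Rotation::Cw270);
    return swap ? Size{s.height, s.width} : s;
}

// Where source pixel `p` lands in the rotated output.
inline Point rotatePoint(Point p, Size source, Rotation r) {
    assert(p.x >= 0 && p.x < source.width);
    assert(p.y >= 0 && p.y < source.height);
    switch (r) {
        case Rotation::Cw90:
            return Point{source.height - 1 - p.y, p.x};
        case Rotation::Cw180:
            return Point{source.width - 1 - p.x, source.height - 1 - p.y};
        case Rotation::Cw270:
            return Point{p.y, source.width - 1 - p.x};
        case Rotation::None:
            break;
    }
    return p;
}
```

Because no interpolation is needed, this kind of rotation can be performed exactly, which is one reason it is commonly offered by display hardware.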

The Surface Manager creates and manages surfaces. As mentioned above, in the Symbian implementation, surfaces are implemented as shared chunks because they must be accessible to user-side processes, the kernel, and composition hardware. Because shared chunks can only be allocated on the kernel side, the Surface Manager is a logical device driver (LDD) and kernel extension. Surfaces can be multi-buffered and are identified by a 128-bit identifier (called the surface ID).
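As a rough illustration of a 128-bit surface ID and a multi-buffered surface, the sketch below stores the identifier as four 32-bit words and locates each buffer within a single memory region. The layout is an assumption made for this example (buffers placed back to back at a fixed spacing), and `SurfaceId`, `SurfaceLayout` and `bufferOffset` are hypothetical names rather than Surface Manager types.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical 128-bit surface identifier held as four 32-bit words.
struct SurfaceId {
    std::array<std::uint32_t, 4> words;

    // An all-zero identifier is treated as "no surface" in this sketch.
    bool isNull() const {
        for (std::uint32_t w : words) {
            if (w != 0) {
                return false;
            }
        }
        return true;
    }
};

inline bool operator==(const SurfaceId& a, const SurfaceId& b) {
    return a.words == b.words;
}

// Assumed layout of a multi-buffered surface inside one memory region.
struct SurfaceLayout {
    std::size_t firstBufferOffset;  // bytes before the first buffer
    std::size_t bufferSpacing;      // bytes from one buffer to the next
    std::size_t bufferCount;        // 1 for single-buffered surfaces
};

// Byte offset of buffer `index` from the start of the memory region.
inline std::size_t bufferOffset(const SurfaceLayout& layout, std::size_t index) {
    assert(index < layout.bufferCount);
    return layout.firstBufferOffset + index * layout.bufferSpacing;
}
```

Identifying a surface by value in this way lets different processes refer to the same surface without sharing pointers.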

The Surface Update Server provides a communication mechanism between the composition engine and its clients. This is particularly useful for clients (such as video) that produce fast updates and use multi-buffered surfaces. The Surface Update Server notifies the client which surface, and which buffer within a multi-buffered surface, was used for the last composition operation. The client can then start updating the buffer without risk of tearing or artefacts.
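The way a client might act on such notifications can be sketched with a small bookkeeping class. This is one plausible reading of the handshake, not the Surface Update Server API: it assumes the client always writes to the buffer that follows the one most recently composed, and the names `CompositionNotice` and `BufferCycle` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical notification payload: the buffer read by the last composition.
struct CompositionNotice {
    std::size_t composedBuffer;
};

// Hypothetical client-side helper for a multi-buffered surface.
class BufferCycle {
public:
    explicit BufferCycle(std::size_t bufferCount)
        : count_(bufferCount), writable_(0) {
        // At least two buffers are needed to write while another is shown.
        assert(bufferCount >= 2);
    }

    // Buffer the client may update next.
    std::size_t writableBuffer() const { return writable_; }

    // Called when a notification arrives. The client moves on to the buffer
    // after the one that was just composed, so it never writes to the buffer
    // currently being read.
    void onNotice(const CompositionNotice& notice) {
        assert(notice.composedBuffer < count_);
        writable_ = (notice.composedBuffer + 1) % count_;
    }

private:
    std::size_t count_;
    std::size_t writable_;
};
```

With two buffers this alternates between them: while the engine composes from one, the client fills the other.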

Copyright ©2010 Nokia Corporation and/or its subsidiary(-ies). All rights reserved. Unless otherwise stated, these materials are provided under the terms of the Eclipse Public License v1.0.