Advanced Audio Adaptation Framework Technology Guide

This document provides additional information about the Advanced Audio Adaptation Framework.

Purpose

The Advanced Audio Adaptation Framework (A3F) provides a common interface for accessing audio resources, playing tones, and configuring audio for playing and recording.

Understanding A3F

A3F functionality is provided by the CAudioContextFactory class, which is responsible for creating audio contexts. The Audio Context API (MAudioContext) maintains all audio components created in a context, where audio components are objects implementing the MAudioStream and MAudioProcessingUnit interfaces.

An MAudioProcessingUnit is created in a context by specifying the type of the processing unit, such as a codec, source, or sink. MAudioProcessingUnit allows extension interfaces to be used to configure additional processing unit settings.
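Creating a processing unit by type, with optional settings reached through an extension interface, can be sketched as below. This is a minimal C++ model under stated assumptions, not the Symbian A3F API: `UnitType`, `ProcessingUnit`, `Extension`, `SampleRateExtension`, `createUnit()` and the `"sample-rate"` identifier are all hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical model, not the Symbian A3F API.
enum class UnitType { Codec, Source, Sink };

// Base for optional extension interfaces that carry extra unit settings.
struct Extension {
    virtual ~Extension() = default;
};

// Illustrative extension holding one additional codec setting.
struct SampleRateExtension : Extension {
    int sampleRateHz = 8000;
};

class ProcessingUnit {
public:
    explicit ProcessingUnit(UnitType type) : type_(type) {}

    UnitType type() const { return type_; }

    // Returns the extension registered under `id`, or nullptr if the unit
    // does not support it.
    Extension* extension(const std::string& id) {
        auto it = extensions_.find(id);
        return it == extensions_.end() ? nullptr : it->second.get();
    }

    void addExtension(const std::string& id, std::unique_ptr<Extension> ext) {
        extensions_[id] = std::move(ext);
    }

private:
    UnitType type_;
    std::map<std::string, std::unique_ptr<Extension>> extensions_;
};

// Creates a unit from its type; in this sketch only codecs get the
// sample-rate extension.
std::unique_ptr<ProcessingUnit> createUnit(UnitType type) {
    auto unit = std::make_unique<ProcessingUnit>(type);
    if (type == UnitType::Codec) {
        unit->addExtension("sample-rate", std::make_unique<SampleRateExtension>());
    }
    return unit;
}
```

A client that needs an additional setting asks the unit for the extension and checks for `nullptr`, so units that do not support that extension are handled without special cases.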

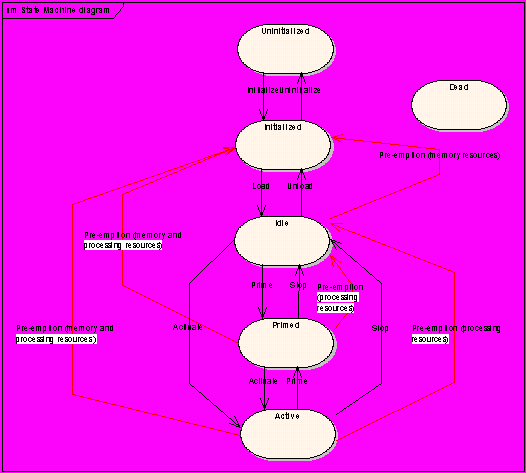

The Audio Stream API (MAudioStream) is an audio component that links processing units together into an audio stream. MAudioStream manages the audio processing state requested by the client and interacts with the audio processing units added to the stream. It provides runtime control over the stream's composite behaviour and manages the lifetime of the entities in the stream (that is, whether the stream is uninitialized, initialized, idle, primed, active, or dead). The stream states are described in more detail later in this document.
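A stream acting as a composite over its processing units can be modelled roughly as follows. In this hypothetical sketch, `Stream`, `Unit`, `StreamState` and `requestState()` are illustrative names rather than MAudioStream members; the point is only that a single client request is applied to every unit linked into the stream.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model, not the MAudioStream interface.
enum class StreamState { Uninitialized, Initialized, Idle, Primed, Active, Dead };

struct Unit {
    std::string name;
    StreamState state;
};

class Stream {
public:
    // Links a processing unit into the stream, in order.
    void addUnit(const std::string& name) {
        units_.push_back(Unit{name, StreamState::Uninitialized});
    }

    // Applies the client's requested state to every linked unit so that the
    // stream behaves as one composite entity.
    void requestState(StreamState requested) {
        for (auto& unit : units_) {
            unit.state = requested;
        }
        state_ = requested;
    }

    StreamState state() const { return state_; }
    const std::vector<Unit>& units() const { return units_; }

private:
    std::vector<Unit> units_;
    StreamState state_ = StreamState::Uninitialized;
};
```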

The Audio Context API provides a grouping mechanism for multimedia resource allocations. MAudioContext groups the actions of the components created inside a context, so any allocation or loss of resources that occurs in a single audio component affects all of the components grouped in that context.
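The grouping rule can be illustrated with a small model in which losing resources in one component affects every component in the same context, but not components in other contexts. `Context`, `resourcesLost()` and `countWithResources()` are hypothetical names; in A3F itself the client learns about such losses through the commit cycle and its callbacks rather than by polling a counter.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of resource grouping, not the MAudioContext interface.
class Context {
public:
    void addComponent(const std::string& name) {
        components_.push_back(Component{name, true});
    }

    // Loss of resources in any one component of this context is applied to
    // every component grouped in it. Returns false if the component is not
    // part of this context.
    bool resourcesLost(const std::string& component) {
        auto it = std::find_if(components_.begin(), components_.end(),
                               [&](const Component& c) { return c.name == component; });
        if (it == components_.end()) {
            return false;
        }
        for (auto& c : components_) {
            c.hasResources = false;
        }
        return true;
    }

    long countWithResources() const {
        return static_cast<long>(std::count_if(
            components_.begin(), components_.end(),
            [](const Component& c) { return c.hasResources; }));
    }

private:
    struct Component {
        std::string name;
        bool hasResources;
    };
    std::vector<Component> components_;
};
```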

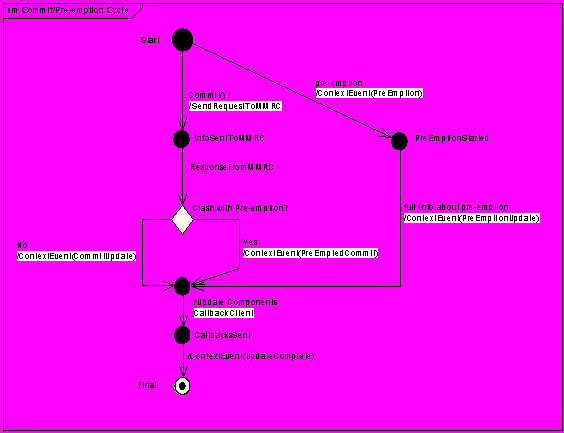

Changes made to an audio stream are handled transactionally: if any change fails to be applied, all other changes made to the stream are considered to have failed as well. Changes are applied only through a successful commit cycle. If the commit fails, a "rollback" mechanism is executed and the stream is reverted to its previous state.
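The all-or-nothing behaviour with rollback can be sketched as a set of staged changes that is either applied in full or discarded. This is a hypothetical C++ model: `TransactionalSettings`, `set()`, `commit()` and the validator callback stand in for the platform-specific decision and are not A3F functions. Discarding the pending changes after a failed commit is an assumption of the sketch.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical model of transactional settings, not an A3F class.
class TransactionalSettings {
public:
    // Decides whether a single change can be applied (stands in for the
    // platform-specific implementation).
    using Validator = std::function<bool(const std::string&, int)>;

    explicit TransactionalSettings(Validator validate) : validate_(std::move(validate)) {}

    // Stages a change; nothing is applied until commit().
    void set(const std::string& key, int value) { pending_[key] = value; }

    // Applies all pending changes or none of them: if any change fails, the
    // committed values are rolled back to the snapshot taken at the start.
    bool commit() {
        const auto snapshot = committed_;
        bool ok = true;
        for (const auto& change : pending_) {
            if (!validate_(change.first, change.second)) {
                ok = false;
                break;
            }
            committed_[change.first] = change.second;
        }
        if (!ok) {
            committed_ = snapshot;  // rollback
        }
        pending_.clear();  // assumption: failed changes are discarded, not retried
        return ok;
    }

    int value(const std::string& key, int fallback) const {
        auto it = committed_.find(key);
        return it == committed_.end() ? fallback : it->second;
    }

private:
    Validator validate_;
    std::map<std::string, int> committed_;
    std::map<std::string, int> pending_;
};
```

In the failing case, a valid change that was already applied earlier in the same commit is undone by the snapshot restore, which is what makes the commit atomic from the client's point of view.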

The client requests changes through various Set() calls and then calls the MAudioContext::Commit() function. The platform-specific implementation then decides whether the Commit() request is allowed, possibly dictates new values if needed, and then applies the changes. If successful, the client is informed through callbacks. If there is a need to modify the context because resources are needed in another context, then the platform-specific implementation can take the resources by pre-empting the context. In this case, the client is informed about the pre-emption.
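The commit cycle, including a policy that may adjust requested values and pre-empt a less important context, might be modelled as below. `Policy`, `ClientContext`, the single shared resource, the `importance` field and the gain clamp are all assumptions made for illustration; the `events` vector stands in for the observer callbacks a real client would receive.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of a commit cycle with pre-emption, not the A3F API.
enum class ContextEvent { CommitApplied, CommitRejected, Preempted };

struct ClientContext {
    std::string name;
    int importance;                    // higher value wins the resource
    bool holdsResource = false;
    int gain = 0;
    std::vector<ContextEvent> events;  // stands in for observer callbacks
};

// Stands in for the platform-specific implementation. This model has a single
// shared resource that only one context can hold at a time.
class Policy {
public:
    explicit Policy(int maxGain) : maxGain_(maxGain) {}

    void commit(ClientContext& ctx, int requestedGain) {
        if (owner_ != nullptr && owner_ != &ctx) {
            if (owner_->importance >= ctx.importance) {
                ctx.events.push_back(ContextEvent::CommitRejected);
                return;
            }
            // Take the resource from the less important context.
            owner_->holdsResource = false;
            owner_->events.push_back(ContextEvent::Preempted);
        }
        owner_ = &ctx;
        ctx.holdsResource = true;
        // The policy may dictate a different value from the one requested.
        ctx.gain = std::min(requestedGain, maxGain_);
        ctx.events.push_back(ContextEvent::CommitApplied);
    }

private:
    int maxGain_;
    ClientContext* owner_ = nullptr;
};
```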

The following diagram shows this behaviour:

Observers

Most of the A3F API functions are asynchronous. For example, a call to Foo() or SetFoo() is followed by a FooComplete(TInt aError) or FooSet(TInt aError) callback.
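The asynchronous setter pattern can be sketched with a simple event queue standing in for the platform's event loop: `SetGain()` returns immediately, and the result arrives later through `GainSet(int error)`. `GainControl`, `GainObserver`, `EventQueue` and `RecordingObserver` are hypothetical; the error constants mirror Symbian's `KErrNone` (0) and `KErrArgument` (-6).

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical model of an asynchronous setter, not an A3F interface.
constexpr int kErrNone = 0;       // mirrors Symbian KErrNone
constexpr int kErrArgument = -6;  // mirrors Symbian KErrArgument

class GainObserver {
public:
    virtual ~GainObserver() = default;
    virtual void GainSet(int error) = 0;
};

// Stands in for the platform's event loop: callbacks run later, in FIFO order.
class EventQueue {
public:
    void post(std::function<void()> fn) { queue_.push_back(std::move(fn)); }
    void runAll() {
        while (!queue_.empty()) {
            auto fn = std::move(queue_.front());
            queue_.pop_front();
            fn();
        }
    }

private:
    std::deque<std::function<void()>> queue_;
};

class GainControl {
public:
    GainControl(EventQueue& queue, GainObserver& observer)
        : queue_(queue), observer_(observer) {}

    // Returns immediately; the outcome is delivered later through GainSet().
    void SetGain(int gain) {
        queue_.post([this, gain] {
            const int error = gain < 0 ? kErrArgument : kErrNone;
            if (error == kErrNone) {
                gain_ = gain;
            }
            observer_.GainSet(error);
        });
    }

    int Gain() const { return gain_; }

private:
    EventQueue& queue_;
    GainObserver& observer_;
    int gain_ = 0;
};

// Records completion codes so the order of callbacks can be inspected.
struct RecordingObserver : GainObserver {
    std::vector<int> results;
    void GainSet(int error) override { results.push_back(error); }
};
```

The observer sees nothing until the queue runs, which is why a client should not assume a setting has taken effect when the setter call returns.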

The following A3F observers are defined:

- Informs with ContextEvent() about the commit cycle. Note that ContextEvent() is called in the same thread context as the A3F client.
- Informs with StateEvent() about state changes.
- Informs about the completion of setters and getters.
- Informs about changes in gain or in maximum gain values.
- Informs about any additional errors in audio processing units.
- Informs that a recorded buffer is ready to be stored. Also informs the client if a buffer should be ignored.
- Informs that a new buffer is ready to be filled with audio data. Also informs the client if any requested buffer should be ignored.
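For the playback case described by the last observer in the list (a new buffer ready to be filled, or requested buffers to be ignored), a minimal client-side supplier is sketched below. `DataSupplier`, `BufferToBeFilled()`, `BuffersToBeIgnored()`, `AudioBuffer` and `ConstantSupplier` are hypothetical names for illustration and do not reproduce the exact A3F signatures.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical playback data-supplier observer; names and signatures are
// illustrative only.
struct AudioBuffer {
    std::vector<std::int16_t> samples;
    bool filled = false;
};

class DataSupplier {
public:
    virtual ~DataSupplier() = default;
    // A new buffer is ready to be filled with audio data.
    virtual void BufferToBeFilled(AudioBuffer& buffer) = 0;
    // Buffers requested earlier should be ignored.
    virtual void BuffersToBeIgnored() = 0;
};

// Example client that fills every buffer with a constant sample value.
class ConstantSupplier : public DataSupplier {
public:
    void BufferToBeFilled(AudioBuffer& buffer) override {
        for (auto& sample : buffer.samples) {
            sample = 1000;
        }
        buffer.filled = true;
        ++filledCount_;
    }
    void BuffersToBeIgnored() override { ignoring_ = true; }

    int filledCount() const { return filledCount_; }
    bool ignoring() const { return ignoring_; }

private:
    int filledCount_ = 0;
    bool ignoring_ = false;
};
```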

Clients using A3F should be aware that requested audio resources can be lost at any time while they are in use. A client with higher importance can cause resource requests to be denied or available resources to become unavailable. In these cases, the commit cycle informs the client through pre-emption events, and the resulting stream state is usually demoted to the highest non-disturbing state.
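The demotion to the highest non-disturbing state can be illustrated using the resource requirements described in the stream state table: EIdle needs all chain resources allocated, while EPrimed and EActive also consume processing time. The enum reuses the state names from the table, but `Lost`, `demote()` and the two resource categories are simplifying assumptions, and the real adaptation may choose differently, since the text only says the state is usually demoted this way.

```cpp
#include <cassert>

// State names follow the stream state table; this enum is a local stand-in.
enum StreamState { EUninitialized, EInitialized, EIdle, EPrimed, EActive, EDead };

// Hypothetical description of what a pre-emption took away.
struct Lost {
    bool chainResources;  // e.g. codec or hardware memory
    bool processingTime;
};

bool needsChainResources(StreamState s) { return s == EIdle || s == EPrimed || s == EActive; }
bool needsProcessing(StreamState s) { return s == EPrimed || s == EActive; }

// Walks down from the current state and returns the first (highest) state
// that does not need any of the lost resources.
StreamState demote(StreamState current, Lost lost) {
    if (current == EDead || current == EUninitialized) {
        return current;
    }
    for (int s = current; s >= EInitialized; --s) {
        const auto candidate = static_cast<StreamState>(s);
        const bool disturbs = (lost.chainResources && needsChainResources(candidate)) ||
                              (lost.processingTime && needsProcessing(candidate));
        if (!disturbs) {
            return candidate;
        }
    }
    return EInitialized;  // not reached: EInitialized needs neither resource
}
```

For example, losing only processing time leaves an EActive stream in EIdle, while losing chain resources as well drops it to EInitialized.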

The possible audio stream states are described in the following table:

| State | Description |
|---|---|
| EUninitialized | The state of the chain before it is initialized. The settings in the logical chain cannot yet be related to the adaptation because no adaptation has been selected. |
| EInitialized | Set after a successful initialization request. The physical adaptation has been selected but may not be fully allocated. No externally visible buffers should be allocated at this point. Note: for zero-copy and shared-chunk buffers, a stream in the EInitialized state should not require buffer consumption; this is important if the base port has only 16 addressable chunks per process. In terms of DevSound compatibility, some custom interfaces are available in this state, although others may require further construction before they become available. |
| EIdle | All chain resources are allocated, but no processing time is consumed in this state (other than that spent putting the chain into the EIdle state). Any existing allocated buffers continue to exist, and the codec is allocated in terms of hardware memory consumption. A stream in the EIdle state can be at a mid-file position: returning to EIdle does not imply a reset of position or runtime settings (that is, time played). |
| EPrimed | The same as EIdle, except that the stream can consume processing time by filling its buffers. The purpose of this state is to prepare a stream so that it is ready to play in as short a time as possible (for example, low latency for audio chains that can be pre-buffered). Once the buffer is full, the stream may continue to use some processing time. There is no automatic transition to EIdle or to EActive when the buffer is full; if either transition is desired, the client must request it. |
| EActive | The chain has the same resources as in the EIdle and EPrimed states and has also started to process the actions requested by the client. Note: a chain in the EActive state can be performing a wide range of operations; however, the semantics are that it is processing the stream and consuming both processing and memory resources. |
| EDead | The stream can no longer function because of a fatal error. The logical chain still exists, but any physical resources should be reclaimed by the adaptation. |
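Two behaviours from the table, namely that a stream in EPrimed does not leave that state by itself when its buffer is full and that returning to EIdle keeps the playback position, can be shown with a small simulation. `StreamModel`, `tick()` and the buffer counter are hypothetical; treating EActive as draining one buffered unit per tick is a simplification that the table does not specify.

```cpp
#include <cassert>

// State names follow the table; this enum is a local stand-in.
enum StreamState { EUninitialized, EInitialized, EIdle, EPrimed, EActive, EDead };

// Hypothetical simulation of two behaviours from the state table.
class StreamModel {
public:
    explicit StreamModel(int capacity) : capacity_(capacity) {}

    // Only the client changes the state; the model never transitions by itself.
    void requestState(StreamState requested) { state_ = requested; }

    // Simulates one unit of processing time.
    void tick() {
        switch (state_) {
        case EPrimed:
            // Pre-fill the buffer; stay in EPrimed even once it is full.
            if (buffered_ < capacity_) {
                ++buffered_;
            }
            break;
        case EActive:
            // Simplification: playing drains one buffered unit per tick.
            if (buffered_ > 0) {
                --buffered_;
            }
            ++position_;
            break;
        default:
            // EIdle and the other states consume no processing time here.
            break;
        }
    }

    StreamState state() const { return state_; }
    int buffered() const { return buffered_; }
    int position() const { return position_; }

private:
    int capacity_;
    StreamState state_ = EUninitialized;
    int buffered_ = 0;
    int position_ = 0;
};
```

Priming to a full buffer and then explicitly requesting EActive reflects the table's rule that the client, not the stream, decides when playback starts.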

The following diagram shows the stream states:

A3F Tutorials

The following tutorials are provided to help you create A3F solutions:

Copyright ©2010 Nokia Corporation and/or its subsidiary(-ies).

All rights reserved. Unless otherwise stated, these materials are provided under the terms of the Eclipse Public License v1.0.